It took a long time before Google officially told webmasters how it handles duplicate content. It has set some pretty basic rules, saying that duplicate content will not hurt your SEO rankings as long as it’s not done in a malicious or spammy way. Google can pretty much figure out which version is the original, then group the similar copies in an appropriate way.


However, if you are trying to use duplicate content to manipulate search results or mislead visitors, you will probably run into issues.

Even if your heart is in the right place, speculation still exists about the impact of duplicate content on SEO. So what’s the real answer here?

Answer: It Depends On A Lot of Factors, But Normally It Will Not Affect Your Website As A Whole

Under certain conditions, duplicate content can be harmful to your website’s performance on the SERPs.

When Duplicates Can Hurt Your SEO

Time and budget constraints are usually the reasons why newbies attempt to cut corners when it comes to publishing content. I hear stuff like “I don’t have the time or money to build a business.” or “Where do I get content?” The basic attitude is that they are not prepared to create value on the internet. These types of people just want to make money, without doing anything to earn it.

The lack of value is mainly what Google and other search engines are concerned about when dealing with duplicate content. Even though Google doesn’t directly penalize websites for duplicate content outside of obvious web spam, there are some other things to be concerned about.

URL Structure: Wasting Your Crawl Budget

Content is repeated across your website whether you intend it or not. If you publish content on mywebsite.com/my-content-blog-post, you’ll likely also see that exact same text under URLs like mywebsite.com/category/content-topics, as well as mywebsite.com/blog-page-3. This allows people to browse your website and discover content, so it is not a negative user experience. Search engines get that!

You just need to make their job easier by telling them which part was the ORIGINAL article, and which ones are just duplicate listings. As long as you have an SEO optimized theme, or an SEO plugin, you won’t have to think about this. They perform this “canonical” organization automatically.

That will ensure Google doesn’t waste time crawling those extra pages. We want to tell Google to crawl mywebsite.com/my-content-blog-post, but they can ignore the other areas like categories, tags, and the blog roll. If Google sends 6 little spiders to your website, you want them to look at all your pages! If they waste 5/6 spiders crawling repeat stuff from all your categories, you just wasted a large chunk of your crawl budget, meaning some things on your website might not even get indexed!

Domain Authority (Don’t Shoot Yourself In The Foot)

Publishing articles multiple times across the web will not get you in trouble. In fact, there’s a name for that: syndication! This is a normal part of publishing, and it just means that one article may be published on multiple different websites.

This is actually considered a good user experience. If I’m a fan of Finance Blog 1, I may not be aware of Finance Blog 2. Someone who published an article on Finance Blog 2 may want to allow their content to be republished to Finance Blog 1 to reach a new audience. Assuming that #1 and #2 have similar domain authority, Finance Blog 2 still gets credit for the original, and gets ranked.

The trouble is when you are a brand new site and you syndicate your content to more powerful blogs. It’s very possible that their domain authority could allow them to outrank the original! Wouldn’t that be a bummer if someone else outranked you with your own content? The video below shows how this happened to a website that syndicated their content to LinkedIn.

To make sure your content is found for the right keywords, follow SEO best practices each time you publish!

Best Practices For Dealing With Duplicate Website Content

Here are some ways of dealing with various types of duplicate content on your site. Realistically, all you need is an out-of-the-box WordPress installation with a recommended SEO plugin. As long as you’ve done so, focus on creating and publishing content with originality that is good for your audience.

1. 301 Redirect For URL Changes

Setting up a 301 (permanent) redirect is great for when you are changing URLs. This could work if you are changing your website name, and want to show Google that the new site is not just a ripped off copy of the old site.

Another reason to use 301’s would be if you’re updating a post and decide to change the URL structure. To preserve the authority of that page (including all inbound links), you want to show Google you’re moving the content from mywebsite.com/old-url-is-way-too-long-and-not-properly-optimized to mywebsite.com/optimized-url. Among the server-side solutions available to webmasters, the 301 redirect stands out because it is widely reported to pass most, if not all, of your link equity to the new page or site.

This means that your web pages will no longer compete with one another on the SERPs once Google has processed the redirect, because it knows exactly which page to rank. That’s why a 301 redirect is the perfect solution for replacing any page or removing duplicates. You can also do this if you find you repeated topics, or covered multiple similar keywords.

Typically you’ll have to set this up in your ‘.htaccess’ file (for Apache web servers) but WordPress users can use a plugin.

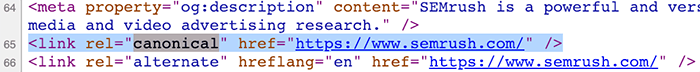
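
If you manage redirects by hand rather than through a plugin, the rules themselves are short. Here is a minimal Python sketch that writes them out, assuming your host runs Apache with the mod_alias Redirect directive enabled. The helper name build_redirect_rules and the example URLs are made up for illustration, so adapt them to your own site.

```python
def build_redirect_rules(redirects):
    """Return one Apache 'Redirect 301' line per (old_path, new_url) pair.

    redirects maps an old URL path (starting with '/') to the full new URL.
    This is a hypothetical helper; it only formats text and does not
    touch your server or your .htaccess file.
    """
    rules = []
    for old_path, new_url in redirects.items():
        if not old_path.startswith("/"):
            raise ValueError(f"old path must start with '/': {old_path!r}")
        # mod_alias syntax: Redirect <status> <old URL path> <new URL>
        rules.append(f"Redirect 301 {old_path} {new_url}")
    return rules


if __name__ == "__main__":
    example = {
        "/old-url-is-way-too-long-and-not-properly-optimized":
            "https://mywebsite.com/optimized-url",
    }
    # Paste the printed lines into .htaccess after reviewing them.
    print("\n".join(build_redirect_rules(example)))
```

Keeping the old-to-new mapping in one place makes it easier to spot redirect chains before they go live.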

2. Canonical Tag

The canonical tag (rel="canonical") is another method SEO experts use to let search engines know which version of a web page they should rank.

The canonical tag is a link element placed in the head of a page’s HTML, and it is fantastic for dealing with duplicate website content. It’s automatically done for you if you’re using WordPress.
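
If you want to double check what a page is actually declaring, you can look for the tag yourself. The sketch below uses only Python’s standard library to pull the canonical URL out of a page’s HTML. The names CanonicalFinder and find_canonical are hypothetical, and the sample page is invented for illustration.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Remember the href of the first <link rel="canonical"> tag seen."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attr_map = dict(attrs)
        # rel may hold several space-separated values, in any letter case
        rel_values = (attr_map.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonical = attr_map.get("href")


def find_canonical(html):
    """Return the canonical URL declared in html, or None if there is none."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical


if __name__ == "__main__":
    sample_page = (
        '<html><head>'
        '<link rel="canonical" href="https://mywebsite.com/my-content-blog-post">'
        '</head><body>Category listing</body></html>'
    )
    print(find_canonical(sample_page))
```

Running this against your category and tag pages is a quick way to confirm your theme or plugin is setting the tag the way you expect.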

3. Link to The Original Page

Whenever search engine bots detect a link to the original web page on the copied content, they get a strong hint about which one to rank. Most of the time, Google would already have a record of when the copy first appeared on the web, so crawlers would know which one to give authority. However, if you are publishing in multiple spots with varying authority, it’s a good idea to make sure the original gets as much link equity and credit as possible.

What About Other People Taking Your Content?

If you have been publishing for a while, then you know that there are a lot of copycats out there. If you show any sign of success, whether that be with earnings or rankings, there’s going to be a group of leeches that will attempt to repeat that success.

Some people still believe there’s a penalty for having identical content out there, but realistically, how could Google take that into account? I have no control over what people do. Those who are new to SEO use up a lot of time and effort ensuring that they deal with these copycats swiftly! Realistically, it’s not worth your time.

Google usually already knows that your web page existed first, so the idea that content scrapers will harm your site by copying your stuff is another myth. In fact, content scrapers can help you, and here’s why.

Usually, these people are too lazy to remove your internal links from the copied content, so the copy ends up linking back to your site. These kinds of backlinks end up passing very little link equity, but you may also get a referral or two. If someone else takes your content and does not provide a link back, you can just do a DMCA takedown.

Nathaniell

What's up ladies and dudes! Great to finally meet you, and I hope you enjoyed this post. My name is Nathaniell and I'm the owner of One More Cup of Coffee. I started my first online business in 2010 promoting computer software and now I help newbies start their own businesses. Sign up for my #1 recommended training course and learn how to start your business for FREE!
